- Tue 07 January 2020

- Data Science

- Michael Lehotay

- #data-cleaning, #time-series, #visualization

import pandas as pd

import numpy as np

import altair as alt

pd.options.display.max_columns = 40

Preliminary Review

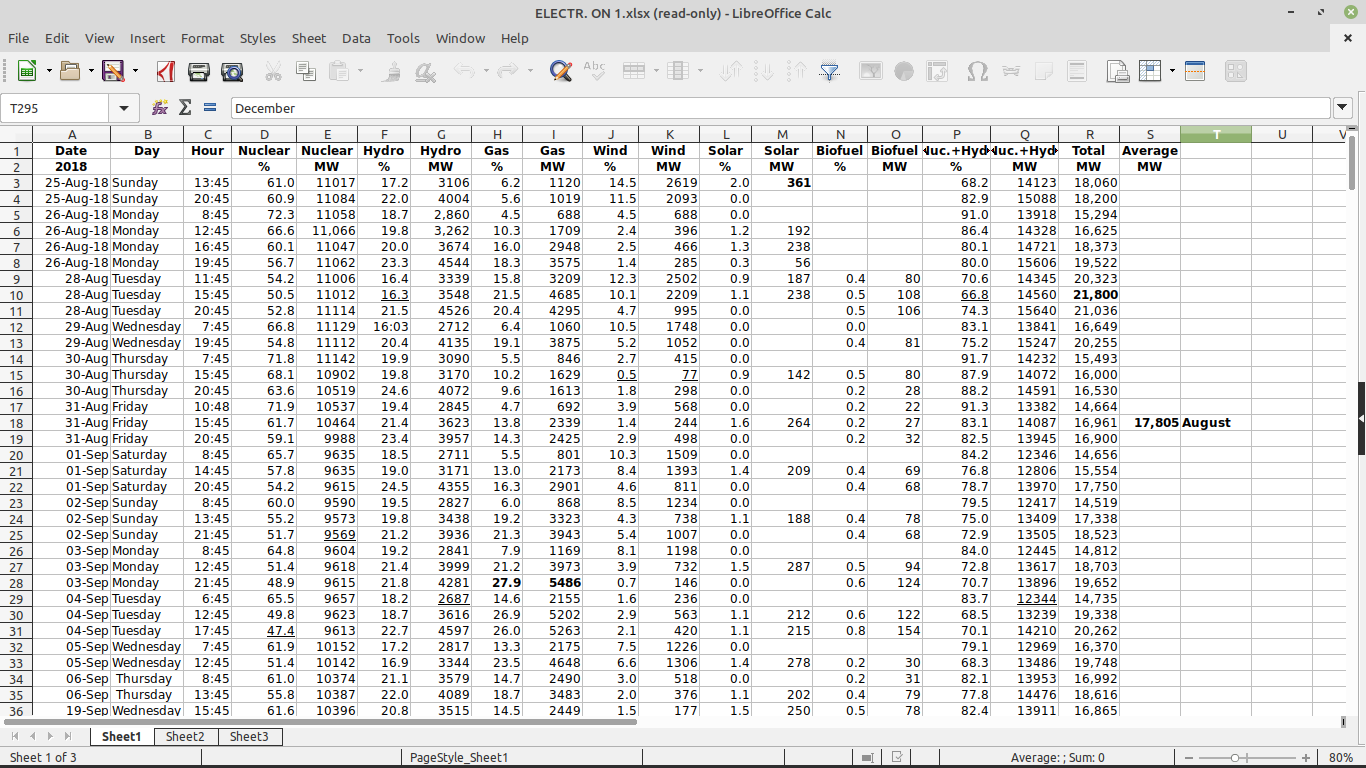

I received some excel files from my father-in-law containing information about electricity consumption in Ontario. He looked up the data on the web three times daily and manually entered it into a spreadsheet. There are three files in xlsx format. File 1 is observations from 2018, file 2 is observations from 2019, and file 3 is 1000 observations starting from August 2018 (approximately 13 months).

Loaded files in LibreOffice Calc to preview data.

Most date entries are in dd-mmm format but a few are in dd-mmm-yy.

Column headers span two rows: row 1 is name and row 2 is units. Header in file 2 is an image instead of text.

File 3 contains two sheets with data. Looks like 1st sheet is a copy of the 2018 data and 2nd sheet is combined data from 2018-08-25 through 2019-09-30.

I see at least one data entry error (cell F12 in file 1).

Files 1 and 3 contain summary statisitics: Three rows of stats at the bottom of the sheet: min, max, and avg of each column. The two rightmost columns show average daily totals per month, and name of the month.

Data Cleaning

Fixed headers to contain both name and units in one row.

Set the format of all the date columns to yyyy-mm-dd.

Some of the numbers in the data contain commas (cell E6 and all of column R). Fixed by setting excel number format to 0.0 for percent columns and 0 for MW columns.

Deleted summary statistics.

Exported all four sheets as CSV.

Diffed the exported csv files. The extra sheet in file 3 is an unchanged copy of the data from file 1. Generated checksums to verify.

!diff data/electricity1.csv data/electricity3a.csv

!cksum data/*.csv

1070850776 26838 data/electricity1.csv

4245893866 90971 data/electricity2.csv

3911391547 96667 data/electricity3.csv

1070850776 26838 data/electricity3a.csv

df1 = pd.read_csv('data/electricity1.csv') # 2018 data

df2 = pd.read_csv('data/electricity2.csv') # 2019 data

df3 = pd.read_csv('data/electricity3.csv') # ~1000 observations starting August 2018

df4 = df1.append(df2)

df4.to_csv('data/electricity4.csv', index=False) # all data in one file

print(df1.shape)

print(df2.shape)

print(df3.shape)

print(df4.shape)

(293, 18)

(1018, 18)

(1075, 18)

(1311, 18)

Feature Selection

So now we've got all 1311 records in one data frame, df4. Let's take a look at these values and see what's up with the percentages. There were no formulas in the excel files so I'm thinking these values may have been calculated by hand, or maybe transcribed from the website. In any case they are probably redundant since we have the actual MW values. Let's check the math to make sure.

Note: bad code here. will fix later.

# something is going on with the dtypes, try forcing them to be numeric

df = pd.read_csv('data/electricity1.csv', parse_dates={'iso8601': [0, 1, 2]},

dtype={'Nuclear %': np.float64,

'Nuclear MW': np.int64,

'Hydro %': np.float64,

'Hydro MW': np.int64,

'Gas %': np.float64,

'Gas MW': np.int64,

'Wind %': np.float64,

'Wind MW': np.int64,

'Solar %': np.float64,

'Solar MW': np.float64,

'Biofuel %': np.object, # fix me

'Biofuel MW': np.float64,

'Nuc.+Hyd. %': np.float64,

'Nuc.+Hyd. MW': np.int64,

'Total MW': np.int64

})

df.dropna(inplace = True)

df[((df['Nuclear MW']/df['Total MW']) - (df['Nuclear %']/100) >= 0.01) |

((df['Hydro MW'] / df['Total MW']) - (df['Hydro %'] / 100) >= 0.01) |

((df['Gas MW'] / df['Total MW']) - (df['Gas %'] / 100) >= 0.01) |

((df['Wind MW'] / df['Total MW']) - (df['Wind %'] / 100) >= 0.01) |

((df['Solar MW'] / df['Total MW']) - (df['Solar %'] / 100) >= 0.01)

#((df['Biofuel MW'] / df['Total MW']) - (df['Biofuel %'] / 100) >= 0.01) |

#((df['Nuc.+Hyd. MW'] / df['Total MW']) - (df['Nuc.+Hyd. %'] / 100) >= 0.01)

]

| iso8601 | Nuclear % | Nuclear MW | Hydro % | Hydro MW | Gas % | Gas MW | Wind % | Wind MW | Solar % | Solar MW | Biofuel % | Biofuel MW | Nuc.+Hyd. % | Nuc.+Hyd. MW | Total MW | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 18 | 2018-09-01 14:45:00 | 57.8 | 9635 | 19.0 | 3171 | 13.0 | 2173 | 8.4 | 1393 | 1.4 | 209.0 | 0.4 | 69.0 | 76.8 | 12806 | 15554 |

| 73 | 2018-10-04 15:45:00 | 60.0 | 10582 | 21.6 | 3469 | 4.5 | 683 | 6.1 | 974 | 1.9 | 289.0 | 0.2 | 27.0 | 81.6 | 14051 | 15144 |

| 99 | 2018-10-16 12:45:00 | 63.2 | 10619 | 22.3 | 3751 | 1.1 | 172 | 11.6 | 1950 | 1.7 | 251.0 | 0.3 | 52.0 | 85.5 | 14370 | 15038 |

| 143 | 2018-11-08 16:45:00 | 60.3 | 10547 | 26.3 | 4605 | 10.5 | 1835 | 3.7 | 457 | 0.1 | 25.0 | 0.2 | 28.0 | 86.6 | 15152 | 17085 |

| 237 | 2018-12-12 13:45:00 | 55.5 | 9973 | 21.7 | 3909 | 11.3 | 3034 | 11.0 | 1983 | 0.3 | 57.0 | 0.1 | 28.0 | 77.2 | 13882 | 18899 |

5 rows have miscalculated percentages, but all the other rows are calculated correctly. Probably data entry errors. Now that we've verified the percentage columns are derived from the MW columns, we can safely drop them.

df5 = pd.read_csv('data/electricity1.csv', parse_dates={'iso8601': [0, 1, 2]},

dtype={'Nuclear MW': np.int64,

'Hydro MW': np.int64,

'Gas MW': np.int64,

'Wind MW': np.int64,

'Solar MW': np.float64,

'Biofuel MW': np.float64,

'Nuc.+Hyd. MW': np.int64,

'Total MW': np.int64

})

df = df5.dropna()

df[df['Nuclear MW'] + df['Hydro MW'] - df['Nuc.+Hyd. MW'] >= 1]

| iso8601 | Nuclear % | Nuclear MW | Hydro % | Hydro MW | Gas % | Gas MW | Wind % | Wind MW | Solar % | Solar MW | Biofuel % | Biofuel MW | Nuc.+Hyd. % | Nuc.+Hyd. MW | Total MW | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 220 | 2018-12-06 10:45:00 | 56.8 | 10758 | 23.7 | 4492 | 3.0 | 575 | 16.0 | 3034 | 0.3 | 48.0 | 0.2 | 36.0 | 80.5 | 13250 | 18943 |

df = df5[['Nuclear MW', 'Hydro MW', 'Gas MW', 'Wind MW', 'Solar MW', 'Biofuel MW', 'Total MW']]

df[df['Nuclear MW'] + df['Hydro MW'] + df['Gas MW'] +

df['Wind MW'] + df['Solar MW'] + df['Biofuel MW'] - df['Total MW'] >= 1]

| Nuclear MW | Hydro MW | Gas MW | Wind MW | Solar MW | Biofuel MW | Total MW | |

|---|---|---|---|---|---|---|---|

| 18 | 9635 | 3171 | 2173 | 1393 | 209.0 | 69.0 | 15554 |

| 73 | 10582 | 3469 | 683 | 974 | 289.0 | 27.0 | 15144 |

| 82 | 10684 | 3965 | 431 | 1567 | 193.0 | 135.0 | 16795 |

| 99 | 10619 | 3751 | 172 | 1950 | 251.0 | 52.0 | 15038 |

| 139 | 10509 | 3357 | 247 | 3427 | 69.0 | 29.0 | 17391 |

| 143 | 10547 | 4605 | 1835 | 457 | 25.0 | 28.0 | 17085 |

| 237 | 9973 | 3909 | 3034 | 1983 | 57.0 | 28.0 | 18899 |

The Nuc.+Hyd. MW and Total MW columns are also derived from the other columns, so we don't need them either.

More Data Cleaning

Need to clean up all the code above, but not tonight. Read the CSV files again instead.

df1 = pd.read_csv('data/electricity1.csv', parse_dates={'iso8601': [0, 1, 2]})

df2 = pd.read_csv('data/electricity2.csv', parse_dates={'iso8601': [0, 1, 2]})

df = df1.append(df2)

Rename the columns, select just the ones we want.

df.columns

Index(['iso8601', 'Nuclear %', 'Nuclear MW', 'Hydro %', 'Hydro MW', 'Gas %',

'Gas MW', 'Wind %', 'Wind MW', 'Solar %', 'Solar MW', 'Biofuel %',

'Biofuel MW', 'Nuc.+Hyd. %', 'Nuc.+Hyd. MW', 'Total MW'],

dtype='object')

df.columns = ['Date','', 'Nuclear','', 'Hydro','', 'Gas','', 'Wind','', 'Solar','', 'Biofuel','','','']

df = df[['Date','Nuclear', 'Hydro', 'Gas', 'Wind', 'Solar', 'Biofuel']]

Deal with the missing values. It makes sense to set them all to zero.

df.isnull().sum()

Date 0

Nuclear 0

Hydro 0

Gas 0

Wind 4

Solar 827

Biofuel 596

dtype: int64

df['Wind'] = np.where(df['Wind'].isnull(), 0, df['Wind'])

df['Solar'] = np.where(df['Solar'].isnull(), 0, df['Solar'])

df['Biofuel'] = np.where(df['Biofuel'].isnull(), 0, df['Biofuel'])

Data Visualization

We are finally ready to create some awesome charts! Let's see what Altair can do with this data.

(

alt.Chart(df)

.mark_line()

.encode(x='Date', y='Nuclear')

)

That is pretty nice! Can we plot another series?

(

alt.Chart(df)

.mark_line()

.encode(x='Date', y='Nuclear')

.encode(x='Date', y='Hydro')

)

Um, no. That is just showing hydro, not nuclear. I think I need to do some reading.

[Time passes...]

It looks like Altair likes long data more than wide data. Let's make it long instead.

df = pd.melt(df, id_vars='Date', var_name='Type', value_name='MW')

df.to_csv('data/electricity5.csv', index=False)

df.head()

| Date | Type | MW | |

|---|---|---|---|

| 0 | 2018-08-25 13:45:00 | Nuclear | 11017 |

| 1 | 2018-08-25 20:45:00 | Nuclear | 11084 |

| 2 | 2018-08-26 08:45:00 | Nuclear | 11058 |

| 3 | 2018-08-26 12:45:00 | Nuclear | 11066 |

| 4 | 2018-08-26 16:45:00 | Nuclear | 11047 |

OK! I think we're ready. I've decided I want to make a stacked area chart. Let's give it a shot.

alt.Chart(df.sample(5000)).mark_area().encode(

x="Date:T",

y=alt.Y("MW:Q"),

color="Type:N"

)

Well, that was not what I had in mind. I think there is too much variability from day to day. Maybe we need to fit regressions for each of the power types, or use moving averages. I'll try the moving averages first.

#df['moving'] = df.groupby('Type').transform(lambda x: x.rolling(10, 1).mean())

#df.index = pd.date_range('1/1/2018', periods=1100)

line = alt.Chart(df.sample(5000)).mark_line(

color='red',

size=3

).transform_window(

rolling_mean='mean(MW)',

frame=[-15, 15]

).encode(

x='Date:T',

y='MW:Q'

)

points = alt.Chart(df.sample(5000)).mark_point().encode(

x='Date:T',

y=alt.Y('MW:Q',

axis=alt.Axis(title='MW'))

)

points + line

To be continued...